[ If you only want to see code, just scroll down ]

Motivation

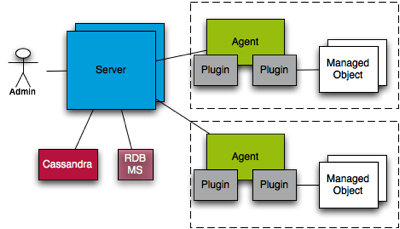

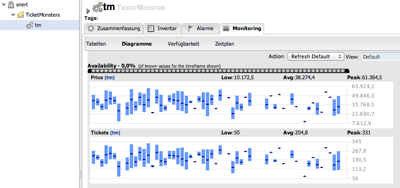

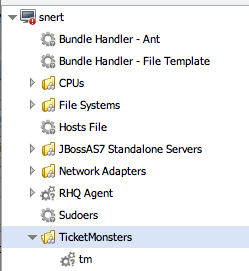

In RHQ we needed a security domain that can be used to secure the REST API and its web app via container-managed security. In the past I had simply used the classic DatabaseServerLoginModule to authenticate against the database.

However, RHQ also allows users to be stored in LDAP directories, which the above module does not cover. I had two options to start with:

- Copy the LDAP login modules into the security domain for REST

- Use the security domain that is already used for the UI and CLI for the REST API as well

The latter option was of course favorable to prevent code duplication, so I went that route. And failed.

I failed because on startup RHQ dropped and re-created the security domain, and the server detected this and complained that the security domain referenced from rhq-rest.war was suddenly gone.

So, next try: don't re-create the domain on startup and only add/remove the LDAP login modules (I say modules because we actually need two).

This also did not work as expected:

- The underlying AS sometimes went into reload-needed mode and did not apply the changes

- When the LDAP modules were removed, the principals from them were still cached

- Flushing the cache did not work and the server went into reload-needed mode

So what I finally did was implement a login module for the REST security domain that simply delegates authentication to another domain and then adds roles on success.

This way rhq-rest.war has a fixed reference to that REST security domain, and the other security domain can be handled as before.

Implementation

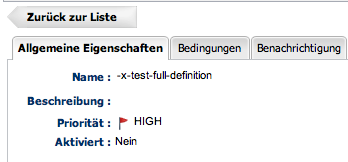

Let's start with the snippet from the standalone.xml file that describes the security domain and parameterizes the module:

    <security-domain name="RHQRESTSecurityDomain" cache-type="default">
        <authentication>
            <login-module code="org.rhq.enterprise.server.core.jaas.DelegatingLoginModule" flag="sufficient">
                <module-option name="delegateTo" value="RHQUserSecurityDomain"/>
                <module-option name="roles" value="rest-user"/>
            </login-module>
        </authentication>
    </security-domain>

So this definition sets up a security domain

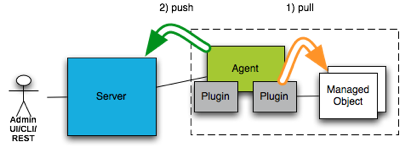

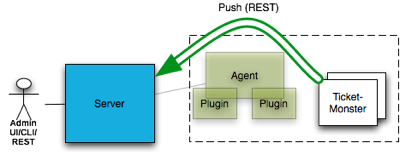

RHQRESTSecurityDomain which uses the DelegatingLoginModule that I will describe in a moment. There are two parameters passed:

- delegateTo: name of the other domain that authenticates the user

- roles: a comma-separated list of roles to add to the principal (these are the roles needed in the security-constraint section of web.xml)
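The code excerpts below read delegateTo but never show where the `rolesList` used later comes from. One plausible way to turn both options into usable values is sketched here; the class name `DelegatingOptions` and the trimming and empty-entry rules are my assumptions, not taken from the RHQ code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

/** Hypothetical holder for the parsed module options (not part of RHQ). */
final class DelegatingOptions {
    final String delegateTo;
    final List<String> roles;

    private DelegatingOptions(String delegateTo, List<String> roles) {
        this.delegateTo = delegateTo;
        this.roles = roles;
    }

    /** Parses the "delegateTo" and the comma-separated "roles" module options. */
    static DelegatingOptions parse(Map<String, ?> options) {
        Object delegate = options.get("delegateTo");
        if (!(delegate instanceof String) || ((String) delegate).trim().isEmpty()) {
            throw new IllegalArgumentException("module option 'delegateTo' is required");
        }
        List<String> roles = new ArrayList<>();
        Object raw = options.get("roles");
        if (raw instanceof String) {
            for (String part : ((String) raw).split(",")) {
                String role = part.trim();   // accept "a, b" as well as "a,b"
                if (!role.isEmpty()) {
                    roles.add(role);         // skip empty entries, e.g. from a trailing comma
                }
            }
        }
        return new DelegatingOptions(((String) delegate).trim(), Collections.unmodifiableList(roles));
    }
}
```

With such a helper, initialize() could call `DelegatingOptions.parse(options)` and keep `roles` as the list that getRoleSets() iterates over.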

I won't show the full listing of the code here; you can find it in git.

To make our lives easier, we don't implement all the functionality ourselves but extend the existing UsernamePasswordLoginModule and override only certain methods.

    public class DelegatingLoginModule extends UsernamePasswordLoginModule {

First we initialize the module with the passed options and create a new LoginContext for the domain we delegate to:

    @Override
    public void initialize(Subject subject, CallbackHandler callbackHandler,
                           Map<String, ?> sharedState,
                           Map<String, ?> options)
    {
        super.initialize(subject, callbackHandler, sharedState, options);

        /* This is the login context (=security domain) we want to delegate to */
        String delegateTo = (String) options.get("delegateTo");

        /* Now create the context for later use */
        try {
            loginContext = new LoginContext(delegateTo, new DelegateCallbackHandler());
        } catch (LoginException e) {
            log.warn("Initialize failed : " + e.getMessage());
        }
    }

The interesting part is the login() method: we get the username and password and store them for later use, then try to log into the delegate domain, and if that succeeds we tell super that we had success, so that it can do its magic.

    @Override
    public boolean login() throws LoginException {
        try {
            // Get the username / password the user entered and save it for later use
            usernamePassword = super.getUsernameAndPassword();

            // Try to log in via the delegate
            loginContext.login();

            // Login was successful, so we can continue
            identity = createIdentity(usernamePassword[0]);
            useFirstPass = true;

            // This next flag is important. Without it the principal will not be
            // propagated
            loginOk = true;

The loginOk flag is needed here so that the superclass's commit() actually picks up the principal along with the roles. If it is not set to true, login() succeeds but no principal is attached.

            if (debugEnabled) {
                log.debug("Login ok for " + usernamePassword[0]);
            }
            return true;
        } catch (Exception e) {
            if (debugEnabled) {
                log.debug("Login failed for : " + usernamePassword[0] + ": " + e.getMessage());
            }
            loginOk = false;
            return false;
        }
    }
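To see the delegation mechanism without a JBoss server, here is a JDK-only sketch. A programmatic `javax.security.auth.login.Configuration` plays the role of RHQUserSecurityDomain, and a hypothetical `StaticPasswordModule` with a hard-coded user (`rhqadmin`/`secret`, made up for the demo) stands in for the real database and LDAP modules. `delegateLogin()` does roughly what login() above does with its loginContext: it feeds the saved credentials in through a callback handler and treats a LoginException as a failed login.

```java
import java.io.IOException;
import java.util.Map;
import javax.security.auth.Subject;
import javax.security.auth.callback.Callback;
import javax.security.auth.callback.CallbackHandler;
import javax.security.auth.callback.NameCallback;
import javax.security.auth.callback.PasswordCallback;
import javax.security.auth.callback.UnsupportedCallbackException;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
import javax.security.auth.login.Configuration;
import javax.security.auth.login.FailedLoginException;
import javax.security.auth.login.LoginContext;
import javax.security.auth.login.LoginException;
import javax.security.auth.spi.LoginModule;

final class DelegationSketch {
    static final String DELEGATE_DOMAIN = "RHQUserSecurityDomain";

    /** Hypothetical stand-in for a login module of the delegate domain. */
    public static final class StaticPasswordModule implements LoginModule {
        private CallbackHandler handler;
        private Map<String, ?> options;

        @Override
        public void initialize(Subject subject, CallbackHandler callbackHandler,
                               Map<String, ?> sharedState, Map<String, ?> options) {
            this.handler = callbackHandler;
            this.options = options;
        }

        @Override
        public boolean login() throws LoginException {
            NameCallback nc = new NameCallback("user: ");
            PasswordCallback pc = new PasswordCallback("password: ", false);
            try {
                handler.handle(new Callback[] { nc, pc }); // ask the caller for credentials
            } catch (IOException | UnsupportedCallbackException e) {
                throw new LoginException("Callback failed: " + e);
            }
            char[] pw = pc.getPassword();
            boolean ok = options.get("user").equals(nc.getName())
                    && pw != null && options.get("password").equals(new String(pw));
            pc.clearPassword();
            if (!ok) {
                throw new FailedLoginException("Bad credentials for " + nc.getName());
            }
            return true;
        }

        @Override public boolean commit() { return true; }
        @Override public boolean abort() { return true; }
        @Override public boolean logout() { return true; }
    }

    /** Programmatic replacement for the delegate domain's XML definition. */
    static final Configuration CONFIG = new Configuration() {
        @Override
        public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
            if (!DELEGATE_DOMAIN.equals(name)) {
                return null; // unknown domain (and no "other" entry): LoginContext refuses it
            }
            return new AppConfigurationEntry[] {
                new AppConfigurationEntry(StaticPasswordModule.class.getName(),
                        LoginModuleControlFlag.REQUIRED,
                        Map.of("user", "rhqadmin", "password", "secret"))
            };
        }
    };

    /** Authenticates user/password against the named domain; false on any LoginException. */
    static boolean delegateLogin(String domain, String user, String password) {
        CallbackHandler handler = callbacks -> {
            for (Callback cb : callbacks) {
                if (cb instanceof NameCallback) {
                    ((NameCallback) cb).setName(user);
                } else if (cb instanceof PasswordCallback) {
                    ((PasswordCallback) cb).setPassword(password.toCharArray());
                } else {
                    throw new UnsupportedCallbackException(cb);
                }
            }
        };
        Thread thread = Thread.currentThread();
        ClassLoader previous = thread.getContextClassLoader();
        // LoginContext instantiates modules by class name via the context class loader
        thread.setContextClassLoader(DelegationSketch.class.getClassLoader());
        try {
            LoginContext ctx = new LoginContext(domain, new Subject(), handler, CONFIG);
            ctx.login();
            return true;
        } catch (LoginException e) {
            return false;
        } finally {
            thread.setContextClassLoader(previous);
        }
    }
}
```

Inside the application server, the two-argument `LoginContext(name, handler)` constructor used in initialize() resolves the domain from the server's own configuration instead; passing a `Configuration` explicitly is only a way to run the idea standalone.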

After success, super will call into the next two methods to obtain the principal and its roles:

    @Override
    protected Principal getIdentity() {
        return identity;
    }

    @Override
    protected Group[] getRoleSets() throws LoginException {
        // rolesList presumably holds the entries of the "roles" module option;
        // its setup is not part of this excerpt
        SimpleGroup roles = new SimpleGroup("Roles");
        for (String role : rolesList) {
            roles.addMember(new SimplePrincipal(role));
        }
        Group[] roleSets = { roles };
        return roleSets;
    }
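Why the loginOk flag matters can be shown with a small JDK-only model of the commit phase. This is a simplified illustration, not the JBoss superclass source: it adds the roles as plain principals, whereas the code above wraps them in a group named "Roles", and `NamedPrincipal` is a hypothetical stand-in for SimplePrincipal.

```java
import java.security.Principal;
import java.util.List;
import java.util.Objects;
import javax.security.auth.Subject;

final class CommitSketch {

    /** Minimal named principal (hypothetical stand-in for SimplePrincipal). */
    static final class NamedPrincipal implements Principal {
        private final String name;

        NamedPrincipal(String name) { this.name = Objects.requireNonNull(name); }

        @Override public String getName() { return name; }
        @Override public boolean equals(Object o) {
            return o instanceof NamedPrincipal && ((NamedPrincipal) o).name.equals(name);
        }
        @Override public int hashCode() { return name.hashCode(); }
        @Override public String toString() { return name; }
    }

    /** Simplified commit(): nothing is attached to the subject unless loginOk is true. */
    static boolean commit(Subject subject, boolean loginOk, Principal identity, List<String> roles) {
        if (!loginOk) {
            return false; // login() "succeeded" elsewhere, but the subject stays empty
        }
        subject.getPrincipals().add(identity);
        for (String role : roles) {
            subject.getPrincipals().add(new NamedPrincipal(role));
        }
        return true;
    }
}
```

With loginOk left false, the subject ends up without any principal, which matches the principal=null symptom described in the debugging section.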

And now the last part: the callback handler that the domain we delegate to uses to obtain the credentials from us. It is the classic JAAS login callback handler. One thing that totally confused me at first was that this handler was called several times during login, and I thought it was buggy. In fact, the number of calls corresponds to the number of login modules configured in RHQUserSecurityDomain.

    private class DelegateCallbackHandler implements CallbackHandler {
        @Override
        public void handle(Callback[] callbacks) throws IOException, UnsupportedCallbackException {
            for (Callback cb : callbacks) {
                if (cb instanceof NameCallback) {
                    NameCallback nc = (NameCallback) cb;
                    nc.setName(usernamePassword[0]);
                }
                else if (cb instanceof PasswordCallback) {
                    PasswordCallback pc = (PasswordCallback) cb;
                    pc.setPassword(usernamePassword[1].toCharArray());
                }
                else {
                    throw new UnsupportedCallbackException(cb, "Callback " + cb + " not supported");
                }
            }
        }
    }

Again, the full code is available in the RHQ git repository.
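The callback handler can also be pulled out and exercised on its own. `CredentialsCallbackHandler` below is a hypothetical standalone variant of DelegateCallbackHandler that receives the credentials through its constructor instead of reading the module's usernamePassword field, and counts how often it is invoked. Calling it once per simulated login module mirrors the repeated calls described above.

```java
import java.io.IOException;
import javax.security.auth.callback.Callback;
import javax.security.auth.callback.CallbackHandler;
import javax.security.auth.callback.NameCallback;
import javax.security.auth.callback.PasswordCallback;
import javax.security.auth.callback.UnsupportedCallbackException;

/** Hypothetical standalone version of DelegateCallbackHandler. */
final class CredentialsCallbackHandler implements CallbackHandler {
    private final String username;
    private final char[] password;
    private int invocations; // one call per login module that asks for credentials

    CredentialsCallbackHandler(String username, char[] password) {
        this.username = username;
        this.password = password.clone(); // keep our own copy of the secret
    }

    @Override
    public void handle(Callback[] callbacks) throws IOException, UnsupportedCallbackException {
        invocations++;
        for (Callback cb : callbacks) {
            if (cb instanceof NameCallback) {
                ((NameCallback) cb).setName(username);
            } else if (cb instanceof PasswordCallback) {
                // PasswordCallback stores a copy, so our array is not shared
                ((PasswordCallback) cb).setPassword(password);
            } else {
                throw new UnsupportedCallbackException(cb, "Callback " + cb + " not supported");
            }
        }
    }

    int invocations() {
        return invocations;
    }
}
```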

Debugging (in EAP 6.1 alpha or later)

If you write such a login module and it does not work, you want to debug it. I started with the usual means and found out that my login() method was working as expected, but the login still failed. I added print statements etc. and found out that the getRoleSets() method was never called. Still, everything looked OK. I did some googling and found this good wiki page: it is possible to tell a web app to do audit logging:

    <jboss-web>
        <context-root>rest</context-root>
        <security-domain>RHQRESTSecurityDomain</security-domain>
        <disable-audit>false</disable-audit>
    </jboss-web>

This flag alone is not enough, as you also need an appropriate logger set up, which is explained on the wiki page. After enabling this, I saw entries like

    16:33:33,918 TRACE [org.jboss.security.audit] (http-/0.0.0.0:7080-1) [Failure]Source=org.jboss.as.web.security.JBossWebRealm;
    principal=null;request=[/rest:….

So it became obvious that the login module did not set the principal. Looking at the code in the superclasses then led me to the loginOk flag mentioned above.

Now, with everything correctly set up, the audit log looks like this:

    22:48:16,889 TRACE [org.jboss.security.audit] (http-/0.0.0.0:7080-1)
    [Success]Source=org.jboss.as.web.security.JBossWebRealm;Step=hasRole;
    principal=GenericPrincipal[rhqadmin(rest-user,)];
    request=[/rest:cookies=null:headers=authorization=user-agent=curl/7.29.0,host=localhost:7080,accept=*/*,][parameters=][attributes=];

So here you see that the principal rhqadmin has logged in and has been assigned the role rest-user, which is the role matched by the security-constraint element in web.xml.

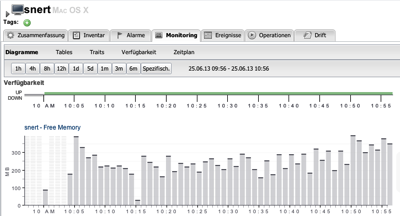

Further viewing

I've presented the above as a Hangout on Air. Unfortunately G+ muted me from time to time when I was typing while explaining :-(

After the video was done I got a few more questions that in the end made me rethink the startup phase for the case where the user has a previous version of RHQ installed with LDAP enabled. In this case, the installer still installs the initial DB-only RHQUserSecurityDomain, and then in the startup bean we check a) whether LDAP is enabled in the system settings and b) whether the login modules are actually present.

If a) holds and the modules are not present, we install them.
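The startup decision boils down to a few lines of logic. The class, method and module names here are hypothetical; the real startup bean reads the system settings and talks to the application server's management layer instead of taking plain arguments.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Set;

/** Hypothetical summary of the startup check for the LDAP login modules. */
final class LdapModuleStartupCheck {

    /**
     * Returns the login modules that still have to be installed:
     * none if LDAP is disabled, otherwise every required module not yet present.
     */
    static List<String> modulesToInstall(boolean ldapEnabled, Set<String> installedModules,
                                         Collection<String> requiredLdapModules) {
        List<String> missing = new ArrayList<>();
        if (!ldapEnabled) {
            return missing; // a) does not hold: keep the DB-only domain as installed
        }
        for (String module : requiredLdapModules) {
            if (!installedModules.contains(module)) {
                missing.add(module); // b) module absent: schedule it for installation
            }
        }
        return missing;
    }
}
```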

This Bugzilla entry also contains more information about this whole story.